Nikon COOLPIX L840 review

Written by Ken McMahon

In depth

The Nikon COOLPIX L840 is a super-zoom with a 38x optical zoom lens and a 16 Megapixel CMOS sensor. Launched in February 2015, it’s an update to the hugely popular COOLPIX L830 which was launched a year earlier and remains in the COOLPIX line-up for now. The new model extends the zoom range from 34x to 38x with all of the extra zoom power at the telephoto end providing an equivalent range of 22.5mm to 855mm.

The 16 Megapixel sensor resolution is unchanged and the L840 sticks with the earlier model’s 921k dot flip-up (and down) LCD screen – no surprise as it’s still a very advanced screen for this class of camera. As before, the L840 is powered by 4 AA batteries but the battery life has been extended and you can now get a very generous 740 shots from a set of NiMH cells. The other major update is Wifi; as well as built-in Wifi the COOLPIX L840 is equipped with an NFC chip, so you can start up a Wifi session by tapping it with your NFC-capable smartphone.

A longer lens and built-in Wifi certainly make the L840 a more attractive option than its predecessor, but is it enough to keep pace with the competition? Here I’ve compared the COOLPIX L840 with Canon’s SX410 IS and SX530 HS; the SX410 IS is similarly priced and the SX530 HS costs a little more. Read on to find out if the COOLPIX L840 is the best budget bridge super-zoom, or whether Canon has more to offer you.

Nikon COOLPIX L840 design and controls

The COOLPIX L840 is a little larger all round than the model it replaces, and it weighs a little bit more. Factor in the four AA batteries and a card and you’re carrying 538 grams. So if you’re looking for something that’s compact and light, the L840 isn’t really it. It’s a tiny bit smaller than the PowerShot SX530 HS, but it weighs nearly a hundred grams (three and a half ounces) more.

If it’s a lightweight compact bridge super-zoom you’re looking for, the PowerShot SX410 IS is the one to go for. It’s significantly smaller and lighter than the other two models, weighing a mere 313 grams.

Minor modifications aside, the COOLPIX L840 looks very similar to its predecessor. The slightly larger body allows a little more space between the controls and the grip, which is covered in a soft textured material and is very comfortable. The soft curves of the older model have been re-contoured to a more boxy outline and the front and right side of the top panel are now raised slightly. There’s a new Wifi button on the top panel and the second zoom rocker on the lens barrel is joined by a new Snap-back zoom button which I’ll explain in the Shooting Experience section below.

On the rear panel the control layout is as conventional as it gets. The four-way controller, or multi-selector as Nikon calls it, is used to navigate the menus and for one-touch access to flash, exposure compensation, macro mode and the self-timer. Flanking it are four buttons, two at the top for shooting mode and playback, with menu and delete buttons below.

The screen sticks out quite a long way from the back panel, but it doesn’t get in the way at all. It’s double hinged so can be flipped up or down – it goes a little way beyond 90 degrees when flipped up and just short of 90 degrees when flipped down. The only drawback with this arrangement is that you can’t flip the screen forwards for shooting selfies. But that’s a minor complaint and, however you look at it, the COOLPIX L840’s articulated screen is a lot more versatile than the fixed screens of the Canon PowerShot SX410 IS and SX530 HS.

The screen itself is a 921k dot LCD panel which provides a very clear and detailed view. Even when in the docked position the COOLPIX L840’s screen looks much more detailed than the 230k dot fixed screen on the PowerShot SX410 IS or the 461k dot screen of the PowerShot SX530 HS. Outdoors in bright sunlight, though, the COOLPIX L840’s screen is no more visible than either of the PowerShots, except that you can angle it to keep the sun off – and that’s actually a big help.

On the right side of the COOLPIX L840 there’s a soft flap that covers the USB / A/V and HDMI ports. There’s also a DC in socket here that you can use to power the L840 from the mains using the optional EH-67 AC mains adapter. The combined battery and card compartment is accessed via a large hinged door that covers the entire right side of the camera below the hand grip. As before, the COOLPIX L840 takes four AA batteries; a set of Alkaline batteries is included in the box and they’ll provide enough power for 590 shots – a big increase on the 390 shots you get from the L830. Replace them with NiMH batteries and that figure goes up to 740 shots, and with more expensive Lithium AA batteries the COOLPIX L840 manages a truly impressive 1240 shots.

The drawback with AAs is that there’s additional cost – not to mention weight – involved. If you don’t already have a set of four batteries and a charger you’ll need to buy them. But as well as the long battery life, AA’s have the advantage that they’re easy to replace and spares are relatively cheap compared to a proprietary Li-Ion battery.

The COOLPIX L840’s built-in pop-up flash is activated by pressing a button on the left of the body just below the flash housing. It has four modes: Auto, Auto with red-eye reduction, Fill flash and Slow sync. The flash has a quoted range of 6.5 meters, a reduction from nine meters on the earlier model. I don’t think the flash has changed; it’s more likely Nikon is now quoting the range at 1600 ISO where it was 3200 ISO previously. That puts it roughly on a par with the power of the PowerShot SX530 HS and PowerShot SX410 IS. It provides enough light for fill-in and to illuminate reasonably close subjects, but unlike the PowerShots, the COOLPIX L840 doesn’t support a more powerful flash accessory.

Nikon COOLPIX L840 lens and stabilisation

The COOLPIX L840’s lens has been updated and now boasts a 38x range with an equivalent focal length of 22.5 to 855mm. It’s always good to see new models with an increased zoom range; the question is, has Nikon increased it enough? The additional 4x is all at the telephoto end of the range so the 22.5mm wide angle remains as before and the maximum telephoto focal length increases from 765mm to 855mm equivalent.
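
As a quick sanity check on those figures, the telephoto end of a zoom is just the wide-angle equivalent focal length multiplied by the zoom ratio. The sketch below uses only the numbers quoted above; the function name is my own and purely illustrative.

```python
def tele_end_mm(wide_mm: float, zoom_ratio: float) -> float:
    """Equivalent focal length at full zoom, given the wide end and the zoom ratio."""
    return wide_mm * zoom_ratio


l830_tele = tele_end_mm(22.5, 34)  # earlier L830: 34x from 22.5mm
l840_tele = tele_end_mm(22.5, 38)  # new L840: 38x from 22.5mm

print(f"L830 telephoto end: {l830_tele:.0f}mm equivalent")
print(f"L840 telephoto end: {l840_tele:.0f}mm equivalent")
print(f"Extra reach: {l840_tele - l830_tele:.0f}mm")
```

The result matches the quoted 765mm and 855mm, so the extra 4x translates to 90mm of additional reach at the long end.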

The COOLPIX L840 has been leapfrogged by Canon’s PowerShot SX410 IS. Nikon has added 4x extra zoom, but Canon has managed a 10x increase with the SX410 IS. So where Nikon won the earlier contest with 34x vs 30x on the L830 and SX400 IS, now the tables are turned with 38x vs 40x.

Nikon COOLPIX L840 coverage, wide and tele

Above: Nikon COOLPIX L840 at 4mm (22.5mm) and at 152mm (855mm)

But there isn’t as big a difference between the 855mm maximum zoom on the COOLPIX L840 and the 960mm on the PowerShot SX410 IS as you might think. The comparison below shows just how much closer that extra 105mm on the SX410 IS will get you. To see a similar comparison between the COOLPIX L840 and the 1200mm maximum zoom on the 50x PowerShot SX530 HS see my Canon PowerShot SX530 HS review.

Above left: Canon PowerShot SX410 IS at 172mm (960mm). Above right: Nikon COOLPIX L840 at 152mm (855mm).

Aside from the focal length, the other thing to consider is the maximum aperture. Shooting with a larger aperture allows you to use a faster shutter speed, either to freeze subject movement or to avoid camera shake in low light, or lets you choose a lower ISO sensitivity setting to avoid excessive noise. On the face of it the COOLPIX L840, PowerShot SX410 IS and PowerShot SX530 HS have similar specifications with f3-6.5, f3.5-6.3 and f3.4-6.5 respectively.

The COOLPIX L840 is a little brighter at the wide angle end of the range, but the thing to bear in mind is that with auto exposure you can’t guarantee the camera will select the widest available aperture at any given focal length. With PASM exposure modes the PowerShot SX530 HS is the only model that will allow you to do that using Aperture priority mode.

One other thing to note is that when zoomed in to 855mm (the maximum on the L840) the SX530 HS can use f5.6 – nearly half a stop brighter than either the L840 or the SX410 IS. It’s not until it gets close to its 1200mm equivalent maximum zoom that it stops down to f6.5.
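
To put a number on "nearly half a stop", the difference in stops between two f-numbers is twice the base-2 logarithm of their ratio, because light gathered scales with the inverse square of the f-number. This is a minimal sketch using the f5.6 and f6.5 figures quoted above; the function name is illustrative.

```python
import math


def stops_between(f_fast: float, f_slow: float) -> float:
    """Exposure difference in stops between a faster and a slower f-number."""
    return 2 * math.log2(f_slow / f_fast)


# SX530 HS at f5.6 versus the L840 at f6.5, both at 855mm equivalent
difference = stops_between(5.6, 6.5)
print(f"f5.6 is about {difference:.2f} stops brighter than f6.5")
```

The result comes out a little over 0.4 of a stop, which squares with the "nearly half a stop" description.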

The COOLPIX L840 has optical image stabilisation which Nikon calls Vibration Reduction or VR. This moves the lens elements to compensate for camera movement and reduce the chances of blurred photos due to camera shake at slow shutter speeds.

Nikon COOLPIX L840 Image Stabiliser off / on

Above left: 100% crop, 4-152mm at 152mm, 1/50, 125 ISO, VR off. Above right: 100% crop, 4-152mm at 152mm, 1/50, 125 ISO, VR on.

Nikon has dropped the Hybrid VR mode which on the earlier L830 combined optical stabilisation with in-camera post processing to digitally remove any blurring that remained. Motion detection, which automatically raised the ISO sensitivity to enable selection of a faster shutter speed when either subject movement was detected or there was a risk of camera shake, is also absent on the new model.

To test the COOLPIX L840’s stabilisation I set it to Auto exposure mode, zoomed the lens to its maximum telephoto setting and took a series of shots in deteriorating light at progressively slower shutter speeds, first with Vibration reduction set to off and then with it on. As you can see from the crops above, the COOLPIX L840 can produce blur-free shots down to 1/50th at the maximum zoom range, four stops slower than the photographer’s ‘one over the focal length’ dictum suggests is safe.
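
The four-stop figure can be checked with a little arithmetic. The ‘one over the focal length’ rule suggests 1/855th of a second as the slowest safe hand-held speed at 855mm equivalent, and each stop doubles the exposure time. The sketch below works only from those two numbers; the function name is illustrative.

```python
import math


def stops_gained(focal_equiv_mm: float, shutter_s: float) -> float:
    """Stops between the rule-of-thumb safe speed (1/focal length) and the speed achieved."""
    rule_of_thumb_s = 1 / focal_equiv_mm
    return math.log2(shutter_s / rule_of_thumb_s)


gain = stops_gained(855, 1 / 50)
print(f"Stabilisation advantage at 855mm and 1/50: about {gain:.1f} stops")
```

The result is just over four stops.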

Nikon COOLPIX L840 movie modes

The COOLPIX L840 offers a comprehensive array of movie modes including a best quality 1080 / 25p / 30p HD mode. You can choose from 25 or 30fps frame rates in the movie menu. Next on the movie mode menu is 1080 / 50i / 60i, followed by 720 / 25p / 30p and 480 / 25p / 30p. If you’re a fan of Apple’s edit-friendly iFrame mode you’ll be disappointed to see it dropped, but I expect you’ll be one of a small minority.

The COOLPIX L840 also has a couple of HS options for playback at speeds other than real time. HS 480/4x shoots 640×480 video at 4x normal speed (100 or 120fps depending on the frame rate selected) which plays back at quarter speed. HS 1080/0.5x records full HD video at half the normal frame rate for double-speed playback. But the earlier model’s HS720/2x option, which records 1280×720 at double the normal frame rate for half speed playback, has been dropped.

This looks like an across the board decision from Nikon, which has done the same thing on its S9900 compact super-zoom, and I think it’s a mistake. Slow motion HD video is a popular feature and for Nikon to drop it while retaining the double speed option is, frankly, a bit baffling. About the only saving grace is that there’s a low resolution slomo mode. I should also point out that neither the PowerShot SX530 HS nor the SX410 IS has any kind of slow motion video feature.

Recording time is limited to fifteen seconds for the HS480/4x mode and two minutes for the HS 1080/0.5x. For standard speed movies the maximum recording time for a single movie clip is 35 minutes, even if there’s sufficient space on the card for more, and Nikon recommends using a speed class 6 or faster card for Full HD recording.
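
To see what those limits mean in practice, here is a rough sketch of the capture frame rate and the resulting playback length for each HS mode. It assumes the footage is played back at the base frame rate selected in the movie menu (25 or 30fps); the playback durations are derived from that assumption rather than quoted by Nikon, and the function name is illustrative.

```python
def hs_mode(speed_factor: float, base_fps: float, max_record_s: float):
    """Return (capture fps, playback seconds) for an HS movie mode.

    speed_factor is 4 for HS 480/4x and 0.5 for HS 1080/0.5x.
    Assumes playback runs at base_fps, so playback time scales by speed_factor.
    """
    capture_fps = base_fps * speed_factor
    playback_s = max_record_s * speed_factor
    return capture_fps, playback_s


for base_fps in (25, 30):
    slow = hs_mode(4, base_fps, 15)      # HS 480/4x, 15 second limit
    fast = hs_mode(0.5, base_fps, 120)   # HS 1080/0.5x, two minute limit
    print(f"{base_fps}fps base: HS 480/4x captures at {slow[0]}fps, plays for {slow[1]}s")
    print(f"{base_fps}fps base: HS 1080/0.5x captures at {fast[0]}fps, plays for {fast[1]}s")
```

On that assumption both limits work out to about a minute of finished footage: fifteen seconds stretched to a minute of slow motion, or two minutes compressed into a minute at double speed.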

Like the PowerShot SX410 IS, the COOLPIX L840 is limited in terms of exposure control for movies, in other words, there isn’t any, other than exposure compensation. You can use the zoom while recording and thankfully, unlike on the earlier COOLPIX L830, if you disable the digital zoom in the set up menu it disables it for both movie recording and photos.

At this point you might be thinking that the COOLPIX L840’s movie features are ok, but basically the same as those on the earlier L830 minus a couple of slow motion modes. That would be true were it not for the introduction of the Short movie show feature. This works in a very similar way to the Hybrid movie mode (formerly Movie Digest) found on Canon compacts and records a set number of clips of a couple of seconds of 1080 / 25p / 30p footage which are then assembled in-camera into a longer movie.

You can choose between fifteen 2-second, three 10-second, or five 6-second clips which are then assembled into a 30-second movie. You can shoot 16:9 12 Megapixel Normal quality still photos when the mode dial is in the Short Movie Show mode position, or leave the mode to shoot full resolution photos before returning to pick up where you left off. It’s a neat feature though not quite as user-friendly as Canon’s which shoots a short clip every time you take a photo.

Download the original file (Registered members of Vimeo only)

This clip, like the others below, was shot in the COOLPIX L840’s best quality 1080 / 25p HD video mode. The COOLPIX L840 handles the exposure well and the image is contrasty and well saturated despite the rather flat lighting. Interestingly, the stabilisation doesn’t seem all that effective during the wide angle pan, but does keep things nice and steady when zoomed in. There’s a little bit of distracting noise from the zoom motor, but on the whole this is a good result.

Download the original file (Registered members of Vimeo only)

For this second clip the camera was mounted on a tripod and the stabilisation was disabled. For this and the previous clip I set the movie AF mode to full-time AF and the results are fairly good though there are a couple of focus jitters during the pan and the AF takes a little while to sort things out at the end of the zoom.

Download the original file (Registered members of Vimeo only)

There’s quite a lot of noise in evidence in this low light pan which also looks a little over-exposed. It starts off well, but the COOLPIX L840 doesn’t really adjust the exposure much as I pan round to the darker area of the bar.

Download the original file (Registered members of Vimeo only)

For this clip I tested the COOLPIX L840’s full-time AF performance by zooming the lens in slightly and panning from the coffee cup on the table up to the bar and back again. Initially the COOLPIX L840 has a hard time locking focus on the coffee cup; it’s also pretty slow and hesitant to adjust the focus at the end of each pan. A so-so result that lacks the confidence and accuracy displayed by the PowerShot SX410 IS and SX530 HS in the same test.

Nikon COOLPIX L840 shooting experience

The COOLPIX L840 is quick to start up; you can fire off a shot a little over a second after pressing the on/off button. That’s a big improvement on the comparatively laggardly L830. You can set an interval after which the L840 enters standby mode to conserve the battery; ordinarily you’d want to keep this fairly conservative – 30 seconds or a minute, but the L840’s battery life is so good that you can confidently set this to a longer period, say 5 minutes, without having to worry about prematurely draining the batteries. That means less chance of missing a shot waiting for it to wake up.

The COOLPIX L840 offers a choice of five AF area modes, more than is usual for a camera in this class, though there’s no manual focus option as on the PowerShot SX530 HS. Face priority works well when people are within a few metres of the camera in good light. If there are no faces in the frame it defaults to the nine-area AF system which it uses to focus on the subject closest to the camera. Alternatively you can manually select the focus area from one of 99 positions using the multi-selector to move the frame around a 9×11 grid, or set a central focus point. Manual positioning of the AF area is not something you’ll find on either of the Canon models and, while it’s not something you might make frequent use of, there are occasions, like when shooting with the camera on a tripod or for macro shots, when it can be very useful.

The COOLPIX L840 also includes target finding AF. This identifies subjects (objects as well as people) in the frame when the camera is pointed at a scene. Potential subjects are identified and tracked with green rectangles. If you’re shooting people, face recognition is probably a better choice of AF modes, but target finding does seem to have an uncanny knack for picking the main subject in a scene which for some situations is a real improvement on the nine-area AF mode.

Finally, there’s a subject tracking mode which allows you to identify a subject with an AF point which then follows it around. This works well, provided your subject is well separated from the background, you’re not zoomed in a long way and the subject isn’t moving quickly or erratically. When you’re fully zoomed in, the COOLPIX L840 can be slow to focus; it can spend more than a second adjusting the focus through the entire range and sometimes you need to make several attempts.

Though the 855mm maximum focal length isn’t especially long by super-zoom standards, it’s still long enough to make keeping your subject in the frame quite challenging. So the new ‘Snap-back zoom’ button on the COOLPIX L840’s lens barrel is a welcome introduction. This works in a similar way to the Framing Assist Seek button on the PowerShot SX530 HS, temporarily zooming out so you can re-acquire your subject, before zooming back in when you release the button. That’s as far as it goes with Snap-back Zoom on the COOLPIX L840; it lacks the depth and sophistication of Framing Assist on the SX530 HS, but it’s a useful feature to have nonetheless.

Above: 1/160, f6.5, 125 ISO, 152mm (855mm equivalent) Auto mode

The COOLPIX L840 has two full resolution continuous shooting modes at 7.4fps and 2fps. The faster of the two shoots a 7-frame burst; focus and exposure are fixed on the first frame for all continuous shooting modes. There are two faster settings that shoot 1280×960 images at 60fps and 640×480 at 120fps.

Gone are the Best Shot Selector and Multi-shot 16 modes of the earlier COOLPIX L830, replaced with the arguably more useful pre-shooting cache feature. This starts recording when the shutter is half pressed; when you fully press the shutter the COOLPIX L840 captures a burst of up to 25 1600×1200 frames at 15fps including up to 4 cached frames from before the shutter was fully depressed. It gives you a better chance of capturing a good sequence because you can wait a fraction longer before committing to a burst without risking losing crucial early frames.

I tested Continuous H shooting on the COOLPIX L840 using a speed class 10 card and with the camera set to the best quality fine JPEG mode and it actually managed 9fps – a fair bit quicker than the 7.4fps quoted. Though it’s a short burst that captures under a second of action, the COOLPIX L840 does at least provide a wealth of faster lower resolution options for capturing longer sequences. All of which means you have a better chance of capturing action sequences than you do with the Canon PowerShot SX410 IS with its 0.5fps mode that barely qualifies for the description ‘continuous’.
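
For a sense of how much action each burst covers, duration is simply the number of frames divided by the frame rate. The sketch below uses the frame counts and rates given in this section; the function name is illustrative.

```python
def burst_seconds(frames: int, fps: float) -> float:
    """Length of action covered by a burst of the given size and frame rate."""
    return frames / fps


quoted = burst_seconds(7, 7.4)     # Continuous H at Nikon's quoted rate
measured = burst_seconds(7, 9)     # Continuous H at the rate measured in testing
cache = burst_seconds(25, 15)      # pre-shooting cache at 1600x1200

print(f"Continuous H, quoted 7.4fps: {quoted:.2f}s")
print(f"Continuous H, measured 9fps: {measured:.2f}s")
print(f"Pre-shooting cache, 25 frames at 15fps: {cache:.2f}s")
```

Either way the full resolution burst covers less than a second, while the lower resolution pre-shooting cache stretches to a little over a second and a half.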

Above: 1/160, f3, 125 ISO, 4.1mm (24mm equivalent) Auto, Macro

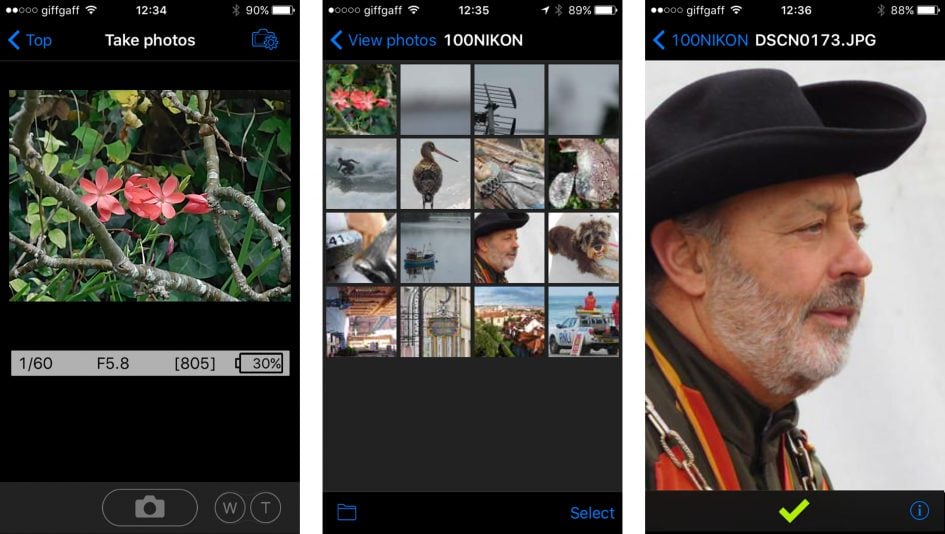

The COOLPIX L840 has built-in Wifi and a new NFC chip means that you can tap it with a suitably equipped smartphone to start a connection; if your phone has NFC you just tap it on the side of the camera (iPhones currently excepted). If you don’t have NFC all you need to do is enable Wifi from the menu then select the COOLPIX L840’s SSID on your phone. Before you do that, it’s advisable to set up security options on the camera, otherwise you’ve got an open Wifi connection that anyone can tap into.

You then have the option of remote shooting using Nikon’s Wireless Mobile Utility app as well as downloading images from the card in the camera. Regardless of the mode dial position the camera shoots in Auto mode and there’s no exposure control available, not even exposure compensation. You can zoom the lens in fairly staccato steps and there’s a noticeable lag between tapping the control on your phone and the camera responding.

The biggest drawback, though, is that you can’t tap your phone screen to set the AF area and focus using the app. The COOLPIX L840 sets the focus once you’ve pressed the remote shutter release button. This sometimes makes it difficult to see what’s in the frame and there’s no guarantee that the camera will focus on what you expect once you press the shutter. So yes, it’s remote shooting but, as with the PowerShot SX530 HS, this is about as basic as it gets.

Downloading images from the camera’s card to the phone is straightforward. You can view the images in a grid, get a full screen look at a low resolution preview and select it for download. However, you can no longer download photos at their original size – only VGA or what Nikon calls ‘Recommended size’, which in my case turned out to be 1440×1080. It’s quicker to download smaller files to your phone and most of the time that’s what you’ll want to do, but it was nice to have the option for original sized downloads and it’s a shame Nikon has decided to remove this feature.

Though it lacks the kind of exposure control provided by the PowerShot SX530 HS with its PASM modes, the COOLPIX L840 makes up for it with a good selection of feature shooting modes. Press the scene mode button on the rear panel and a menu offers five alternatives to Auto mode. The first one, Scene auto selector, is really just a more sophisticated auto mode which uses scene detection to select an appropriate scene mode.

If you want to get a bit more hands on the next option down will allow you to select the scene mode yourself, with a choice of 18 at your fingertips including the usual subjects, like Portrait, Landscape, Sports, Beach and Sunset. There are also a couple of less familiar options.

Backlighting has two modes, the first of which uses fill-in flash to illuminate backlit subjects. The second is an HDR mode which takes a fast burst of shots and combines them in the camera to produce a result with better highlight and shadow detail than would be possible with a single exposure. Neither of the Canon PowerShots offers an HDR mode and this is a feature that will make a big difference to the quality of the results you’re able to obtain with the L840 in demanding lighting situations.

Backlighting HDR isn’t the only shooting mode the COOLPIX L840 can offer that the PowerShot SX410 IS and SX530 HS don’t. The next option down on the shooting mode menu is Easy panorama. There are two panorama modes for shooting 180 and 360 degree views. You press the shutter once and pan the camera as smoothly as you can. You can pan with the camera in portrait or landscape orientation – the COOLPIX L840 works out which, you don’t need to tell it beforehand. Portrait mode produces the largest images, which are 1536 x 4800 pixels for 180 degree panoramas and 1536 x 9600 for 360 degree ones.

Then there are Moon and Bird-watching modes. Moon provides a selection of tint options which you can select on screen. More usefully, a small square in the middle of the frame indicates the area framed when fully zoomed in, so with the camera on a tripod you can frame the moon in the small square then press OK to automatically zoom the lens all the way in, theoretically at least. In practice, as anyone who has tried to shoot the moon will know, framing is a tricky business. That said, the new Moon scene mode may be enough to encourage first timers to have a crack at lunar photography.

Bird-watching mode offers single shot and continuous modes, the latter shooting a 7 frame burst in a second. Like Moon mode, it provides a frame guide and zooms in automatically, this time to 800mm. The arbitrary limit on the focal length is a bit of an imposition though and I reckon most bird photographers will be happier just to use program auto mode and set the drive mode to one of the continuous options.

Above left: COOLPIX L840 : Sepia. Above right: COOLPIX L840 : High contrast monochrome.

Above left: COOLPIX L840 : Selective colour. Above right: COOLPIX L840 : Toy camera effect 1.

Above: COOLPIX L840 : Mirror

The effects position provides a good range of filters including soft focus, sepia, cross process and selective colour, some of which you can see above. And finally there’s Smart portrait mode which you could easily overlook, but which provides a bundle of effects, filters and features aimed at helping you get the best from your portrait shots. These include skin softening and tinting, soft focus effect, self timers including smile activation and self-collage, which takes a sequence of shots and mounts them together photo-booth style.

Nikon's COOLPIX L840 is a DSLR-styled super-zoom camera with a surprisingly powerful feature-set for the money. You get 16 Megapixels, 1080p video, a tilting 3in screen and a 38x optical range equivalent to 22.5-855mm. It's also powered by AA batteries that may increase the weight, but at least a spare set is easy to get hold of. The major update over its best-selling predecessor is the inclusion of Wifi with NFC which makes it an even more compelling proposition, especially for the price. A great super-zoom camera at an entry-level price.
